Solving the efficiency problem at the heart of AI

Our Switzerland-based investment fund invests in the technologies that can help to unlock an artificial intelligence revolution. Backed by prominent investors such as technologist Tej Kohli*, we invest across five verticals: machine learning, robotics, bionics, sensors, and mapping and localization. We also back frontier applications that combine AI with new innovations such as CRISPR.

The Rewired thesis is that applied science and technology ventures can power the new world economy whilst also doing good. But at the heart of this thesis there is also a problem: successful artificial intelligence too often comes at a significant financial and environmental cost. Now researchers are working on solving this problem, which lies at the heart of the oncoming AI revolution.

The real cost of training an AI model

Last year OpenAI unveiled the largest language model in the world, a text-generating tool called GPT-3 that can write creative fiction, translate legalese into plain English, and even answer obscure trivia questions. This feat of intelligence was achieved by deep learning, a machine learning method patterned to emulate the way that neurons in a human brain process and store information.

But this innovation also came at a hefty price: at least $4.6 million and 355 years in computing time, assuming that the model was trained on a standard neural network chip. The model’s colossal size — 1,000 times larger than a typical language model — was the main factor in its high cost.
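To put those two figures side by side, the short sketch below works out the hourly rate they imply. It uses only the numbers quoted above; the 365-day year and the 1,000-chip cluster with perfect scaling are illustrative assumptions, not details of how GPT-3 was actually trained.

```python
HOURS_PER_YEAR = 365 * 24  # assumption: ordinary 365-day years


def implied_hourly_rate(total_cost_usd, gpu_years):
    """Cost per chip-hour implied by a total bill and a compute budget."""
    return total_cost_usd / (gpu_years * HOURS_PER_YEAR)


def wall_clock_days(gpu_years, n_chips):
    """Elapsed days if the work is split evenly over n_chips (ideal scaling)."""
    return gpu_years * 365 / n_chips


gpu_hours = 355 * HOURS_PER_YEAR
rate = implied_hourly_rate(4_600_000, 355)
print(f"{gpu_hours:,} chip-hours, about ${rate:.2f} per chip-hour")
print(f"{wall_clock_days(355, 1000):.0f} days on a hypothetical 1,000-chip cluster")
```

In other words, the headline cost is what roughly three million chip-hours add up to at around a dollar and a half each.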

According to Neil Thompson, an MIT researcher who has tracked deep learning’s unquenchable thirst for computing, this is unsustainable. At present a lot of computing power is needed just to get a little improvement in performance from machine learning models. This represents a major inhibitor to scaling up toward a world of omnipresent AI, with all of the benefits that it could bring to humanity.

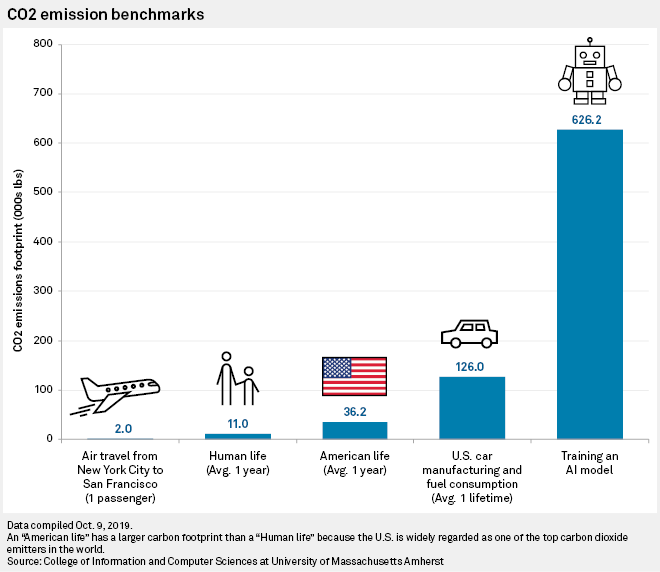

As the financial and environmental cost of training AI has become clearer, some early excitement about the prospects of a world of AI has changed to alarm. In a recent study, researchers at the University of Massachusetts at Amherst estimated that training a large deep-learning model produces 626,000 pounds of planet-warming carbon dioxide, equal to the lifetime emissions of five cars.
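For readers who think in metric units, the estimate can be converted with a few lines of arithmetic. This is only a unit conversion of the figures quoted above; the even split across five cars is a simplifying assumption.

```python
LB_TO_KG = 0.45359237  # exact definition of the international pound


def pounds_to_tonnes(pounds):
    """Convert pounds to metric tonnes."""
    return pounds * LB_TO_KG / 1000.0


total_t = pounds_to_tonnes(626_000)
per_car_t = total_t / 5  # assumption: emissions split evenly across five cars
print(f"{total_t:.0f} tonnes in total, about {per_car_t:.0f} tonnes per car lifetime")
```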

Compounding the problem is the fact that as models grow bigger, their demand for computing is outpacing improvements in hardware efficiency. Chips specialized for neural-network processing, like GPUs and TPUs, have offset some of the demand for more computing power, but not by nearly enough.

Computational limits have dogged neural networks from their earliest incarnation — the perceptron — in the 1950s. As computing power exploded, and the Internet unleashed a tsunami of data, they evolved into powerful engines for pattern recognition and prediction. But each new milestone also brought with it an explosion in cost, as data-hungry models demanded increased computation.

The path toward leaner, greener algorithms

The human perceptual system, on which many deep learning models are modelled, is extremely efficient at using data. Part of the answer to the problem of ‘processing-thirsty’ AI may therefore lie in deep learning models getting better at emulating the human brain.

Human brains don’t pay attention to every last detail, so why should deep learning models? We can instead use machine learning to adaptively select the right data, at the right level of detail, to make deep learning models more efficient and compact.

In a paper at the European Conference on Computer Vision (ECCV) in August 2020, researchers at the MIT-IBM Watson AI Lab described a method for training an ML model to unpack a scene from just a few glances, as humans do, by cherry-picking the most relevant data within that scene, rather than analysing every single aspect.
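The general idea of looking only where it matters can be illustrated with a toy example. The sketch below is not the method from the ECCV paper: it simply splits a small greyscale image into tiles and keeps the few with the highest pixel variance, on the assumption that busy regions are the interesting ones. The function names and the variance score are hypothetical choices made for illustration.

```python
from statistics import pvariance


def split_into_patches(image, patch):
    """Yield ((row, col), pixels) for non-overlapping patch x patch tiles."""
    rows, cols = len(image), len(image[0])
    for r in range(0, rows - patch + 1, patch):
        for c in range(0, cols - patch + 1, patch):
            pixels = [image[r + i][c + j] for i in range(patch) for j in range(patch)]
            yield (r, c), pixels


def select_glances(image, patch, k):
    """Return the top-left corners of the k tiles with the highest variance."""
    scored = [(pvariance(pixels), pos) for pos, pixels in split_into_patches(image, patch)]
    scored.sort(reverse=True)
    return [pos for _, pos in scored[:k]]


# A flat background with one "busy" corner.
image = [
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 9, 1],
    [0, 0, 2, 8],
]
glances = select_glances(image, patch=2, k=1)
print(glances)
```

A downstream model would then process only the selected tiles, which is where the saving in computation would come from.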

Researchers are using deep learning itself to design more economical models through an automated process known as ‘neural architecture search’. Song Han, an assistant professor at MIT, has used automated search to design more efficient deep learning models for language understanding and for scene recognition, which matters in particular for autonomous cars, where picking out looming obstacles is important. Han and his colleagues used an evolutionary-search algorithm to create a model that they say is three times faster and uses eight times less computation than the next-best method.
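To give a flavour of what an evolutionary search looks like, here is a deliberately tiny sketch. It is not the algorithm used in the MIT work: the search space (a depth and a width), the accuracy proxy and the cost penalty are all made-up stand-ins. In a real search, each candidate would be trained or evaluated on data, which is the expensive part.

```python
import random

# Hypothetical search space: a candidate architecture is (depth, width).
DEPTHS = [2, 4, 8, 16]
WIDTHS = [32, 64, 128, 256]


def cost(arch):
    """Rough proxy for compute: layers times width squared."""
    depth, width = arch
    return depth * width * width


def proxy_accuracy(arch):
    """Made-up stand-in for validation accuracy that saturates with size."""
    depth, width = arch
    return 1.0 - 1.0 / (1.0 + 0.05 * depth * width ** 0.5)


def fitness(arch, cost_weight=1e-7):
    """Reward accuracy, penalise compute."""
    return proxy_accuracy(arch) - cost_weight * cost(arch)


def mutate(arch, rng):
    """Re-draw either the depth or the width at random."""
    depth, width = arch
    if rng.random() < 0.5:
        return (rng.choice(DEPTHS), width)
    return (depth, rng.choice(WIDTHS))


def evolve(generations=20, population_size=8, seed=0):
    rng = random.Random(seed)
    population = [(rng.choice(DEPTHS), rng.choice(WIDTHS)) for _ in range(population_size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        survivors = population[: population_size // 2]  # keep the fitter half
        children = [mutate(rng.choice(survivors), rng)
                    for _ in range(population_size - len(survivors))]
        population = survivors + children
        history.append(max(fitness(a) for a in population))
    return max(population, key=fitness), history


best, history = evolve()
print("best (depth, width):", best)
```

Because the fitter half of each generation survives unchanged, the best score never gets worse from one generation to the next.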

Researchers are also probing the essence of ‘deep nets’ to see whether it might be possible to train only a small part of even hyper-efficient networks. Under their lottery ticket hypothesis, PhD student Jonathan Frankle and MIT Professor Michael Carbin proposed that within each model lies a tiny subnetwork that could have been trained in isolation with as few as one-tenth as many weights, which they call a “winning ticket.”
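The core step usually associated with finding such a subnetwork is magnitude pruning: train the full model, keep only the largest weights, and rewind those survivors to their initial values before training again. The sketch below shows that one step on a flat list of weights. The weight values are invented for illustration, and a real implementation would work layer by layer inside a deep learning framework.

```python
def magnitude_mask(trained, keep_fraction):
    """Return a 0/1 mask that keeps the largest-magnitude trained weights."""
    if not 0 < keep_fraction <= 1:
        raise ValueError("keep_fraction must be in (0, 1]")
    n_keep = max(1, round(len(trained) * keep_fraction))
    ranked = sorted(range(len(trained)), key=lambda i: abs(trained[i]), reverse=True)
    keep = set(ranked[:n_keep])
    return [1 if i in keep else 0 for i in range(len(trained))]


def rewind(initial, mask):
    """Reset surviving weights to their initial values and zero out the rest."""
    return [w if m else 0.0 for w, m in zip(initial, mask)]


# Invented example: ten weights before and after a hypothetical training run.
initial = [0.1, -0.2, 0.05, 0.3, -0.15, 0.02, 0.25, -0.08, 0.12, -0.01]
trained = [0.9, -0.1, 0.03, 0.2, -1.4, 0.01, 0.3, -0.05, 0.6, -0.02]

mask = magnitude_mask(trained, keep_fraction=0.1)  # keep one-tenth of the weights
ticket = rewind(initial, mask)
print(mask)
print(ticket)
```

The pruned, rewound network is the candidate ticket; whether it "wins" is only known after retraining it and comparing its accuracy with the full model.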

In less than two years, the “winning ticket” idea has been cited more than 400 times, including by Facebook researcher Ari Morcos, who has shown that winning tickets can be transferred from one vision task to another, and that winning tickets exist in language and reinforcement learning models, too. The only missing piece of the puzzle is designing an efficient way to find winning tickets.

Hardware designed for efficient algorithms

As deep learning pushes classical computers to the limit, researchers are inevitably now pursuing alternatives. Possible solutions range from optical computers that transmit and store data using photons instead of electrons to quantum computers that have the potential to increase computing power exponentially by representing data in multiple states all at once.

Until a new paradigm emerges, researchers have focused on adapting the modern chip to the growing demands of deep learning. The trend began with the discovery that video-game graphical chips, or GPUs, could turbocharge deep-net training with their ability to perform massively parallelized matrix computations. GPUs are now one of the workhorses of modern AI, and have since spawned a plethora of new ideas for boosting the efficiency of deep learning through specialized hardware.

Much of this work hinges on finding ways to store and reuse data locally, across the chip’s processing cores, rather than waste time and energy transmitting data to and from a designated memory site. Processing data locally not only speeds up model training, but also improves inference, allowing deep learning applications to run more smoothly on smartphones and in other mobile settings such as autonomous cars.

Other hardware innovators are focused on reproducing the brain’s energy efficiency. Inspired by the brain’s frugality, researchers are experimenting with replacing the binary ‘on-off’ switch of classical transistors with analogue devices that mimic the way that synapses in the brain grow stronger and weaker during learning and forgetting.

An electrochemical device, developed at MIT and published in Nature Communications, is modelled on the way that resistance between two neurons grows or subsides as calcium, magnesium or potassium ions flow across the synaptic membrane dividing them. The device uses the flow of protons in and out of a crystalline lattice of tungsten trioxide to tune its resistance along a continuum, in an analogue fashion. Even though the device is not yet optimized, its energy consumption per unit area per unit change in conductance is already of an order close to that of the brain.

A new world of omnipresent AI

Energy-efficient algorithms and hardware can shrink the environmental impact of artificial intelligence. But there are also other reasons to innovate:

1) Efficiency will allow computing to move from data centers to edge devices like smartphones, making AI accessible to more people around the world.

2) Shifting computation from the cloud to personal devices reduces the flow, and potential leakage, of sensitive data.

3) Processing data on the edge eliminates transmission costs, leading to faster inference with a shorter reaction time, which is key for interactive driving and augmented/virtual reality applications.

For all of these reasons and more, we need to embrace efficient artificial intelligence if we are going to usher in a world of human-improving omnipresent AI.

* Tej Kohli is an investor in Rewired. This post is based on an article which first appeared here: https://news.mit.edu/2020/shrinking-deep-learning-carbon-footprint-0807