Explainer: How Rishi Sunak is trying to strike a balance on AI regulation

World leaders’ meetings like the G7, taking place in Japan right now, are not only about cringy photo ops and bilateral diplomatic statements. They’re also an opportunity for politicians to set out strategies and deliver messages to their foreign counterparts and their own domestic audience.

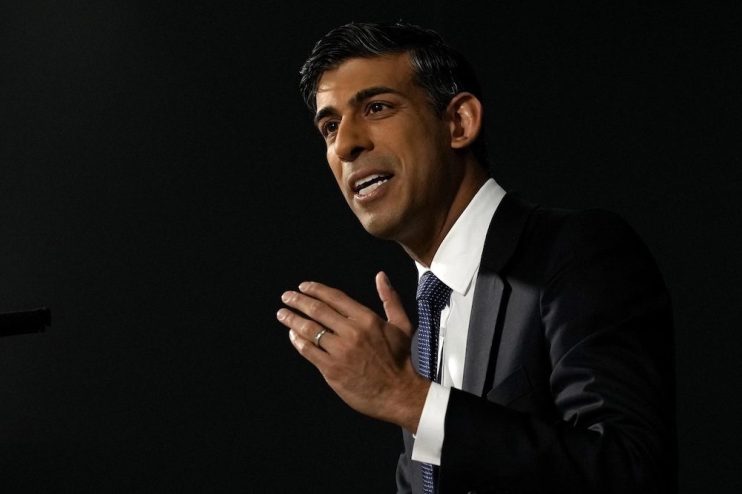

Rishi Sunak has proven a slick performer on the foreign stage so far, contrary to what many expected when he became prime minister. He knows how these things work. So it’s no coincidence that he chose the G7 to signal that his approach to AI regulation could soon tilt a little more towards control and a little less towards a free-for-all marketplace.

The prime minister admitted the UK might need to draw up new regulations to tackle AI – implying the government may have to revisit its fairly laissez-faire approach.

Back in March, the government set out its strategy on artificial intelligence. It said AI companies would have to follow five “principles”, rather than laws, when developing technology. Regulators would then have to set out the rules of the game.

There are some obvious issues with this approach. Relying on principles rather than laws immediately moves the matter into the realm of subjectivity, making AI harder to regulate. The principles include fairness, and accountability and governance. But how do you define fairness? And who decides what is fair, and for whom, in the world of technology?

Then there’s the problem of dividing tasks. The regulators the government has earmarked for overseeing AI are the Health and Safety Executive, the Information Commissioner’s Office, the Competition and Markets Authority, Ofcom and the Medicines and Healthcare products Regulatory Agency. Each has its own areas of expertise and experience, and there will be many cases where they have to collaborate because AI touches on several of their remits at once. Regulators do not always work well together.

A clear logic sits behind this strategy. The government thinks that a lightly regulated environment will lure lucrative AI companies, tech startups and other businesses that find the EU’s regulatory regime too tight into the UK market. These companies would bring innovation, stoking growth and making money. Many big business voices have supported this approach, and for good reason: the AI industry contributed £3.7bn to the UK economy last year and employs more than 50,000 people.

But in the meantime, several AI experts and industry insiders have come out warning of the perils of letting AI grow unchecked, each describing threats to the security of our businesses and of our democracies. It’s always hard to strike a regulatory balance when new technologies arise: you want regulation to be flexible, so that it can be modified as the technology develops, but you also want strong safeguards in case something goes very wrong.

Sunak’s comments at the G7 show the UK government is at least recalibrating. He will stand alongside Ursula von der Leyen, the president of the European Commission, to call for greater “guardrails” on AI development. He may finally have understood that on something like AI, international cooperation is an imperative rather than a choice.